Welcome to the second vocabotics AI newsletter. It’s been another busy week in the world of AI thanks to CES. Many of the big (and small) names chose this prestigious event to launch their latest tech. It’s only the second week of 2024, yet dozens of amazing products have already appeared.

Hardware

rabbit r1

First up is the rabbit r1. Described as a pocket companion, this nifty little device uses AI to assist its owner with the daily tasks in their life. rabbit os makes use of a Large Action Model (LAM). rabbit describe the LAM as “a new type of foundation model that understands human intentions on computers”. Put simply, rabbit os listens to what the user says, then gets it done.

The device packs in a lot of tech for the very sweet price of just $199. At its heart sits a 2.3GHz MediaTek Helio P35 processor with 4GB RAM and 128GB of flash storage. Connectivity is provided through Wi-Fi and LTE, and charging is through USB-C. The makers claim the battery will last all day. A 2.88-inch touchscreen is combined with a scroll wheel and a single push-to-talk button on the side. The 360-degree rotating camera works as both a front- and rear-facing camera. When not in use, the camera can rotate to an intermediate position to ensure privacy.

For a device that few had contemplated needing, traction has been incredible. The founders were expecting to sell around 500 units following the show, but in fact sold 10,000 units within the first 24 hours and 30,000 by the weekend. The low price, the Apple-style keynote, the iconic design from teenage engineering, and a great attitude from the founders have surely made this the hottest AI device of 2024 so far. How useful it turns out to be in real life, only time will tell.

The closest competitor in this arena is the hu.ma.ne ai pin. It costs $699, over three times the price of the r1, and has a much more limited user interface, so the r1 is sure to give the ai pin a run for its money.

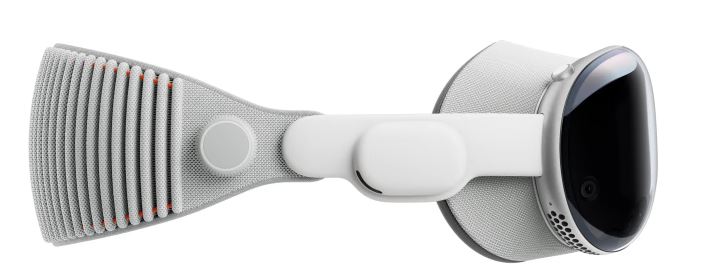

Apple Vision Pro

I don’t know if it’s just me, but I keep calling it the Pro Vision. Anyway, the big news of the week is that it’s finally arriving. Pre-orders begin on 19 January at 5:00 a.m. PT, with availability starting on 2 February. As always, American customers will be the first in the world to gain access to this highly anticipated device.

As a quick reminder, this device packs in serious horsepower, with an M2 processor assisted by an R1 co-processor. 12 cameras, 6 microphones, and 5 other sensors are also squeezed in. The highlight of the device for many, though, will be the two 1.41-inch micro-OLED displays, which deliver a combined 23 megapixels at 90 FPS. For many, the images displayed inside this device will be beyond anything they have witnessed before. Battery life is 2 hours. It doesn’t come cheap, though, starting at $3,499 with 256GB of storage.

Celestron Origin

As an avid amateur astronomer, I find that the Celestron Origin piques my interest. By combining a 6″ Rowe-Ackermann Schmidt Astrograph with a 0.35″ 6.44MP Sony IMX178LQJ sensor, a Raspberry Pi, and a dash of AI, Celestron have the potential to revolutionize amateur astronomy.

The use of a Raspberry Pi is particularly interesting, as it opens up the possibility of custom development and scope control. Let’s hope it hasn’t been locked down too much.

In the past, photographing deep space objects at home would require many hours of setup, alignment, waiting for the weather, and image capture in often freezing conditions. This was followed by almost as long spent stacking, processing, and finishing the images. The Celestron Origin looks to solve many of these issues. The real question will be how much of the image it produces is what you saw that night, and how much the AI has filled in. Either way, this telescope is sure to inspire the next generation of young astronomers.
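For readers wondering what "stacking" actually does, here is a minimal sketch of the simplest variant, mean stacking, where many noisy exposures of the same target are averaged pixel by pixel. This is only an illustration of the general idea, not Celestron's processing pipeline: the frame data is simulated, and the function names, the flat "sky patch", and the noise level are all made up for the example.

```python
import random
import statistics


def stack_frames(frames):
    """Average a list of equally sized frames pixel by pixel (mean stacking)."""
    return [statistics.fmean(pixel_values) for pixel_values in zip(*frames)]


def simulate_frame(true_signal, noise_sigma, rng):
    """Return one noisy exposure: the true signal plus Gaussian noise per pixel."""
    return [value + rng.gauss(0.0, noise_sigma) for value in true_signal]


def rms_error(frame, true_signal):
    """Root-mean-square difference between a frame and the true signal."""
    squared = sum((a - b) ** 2 for a, b in zip(frame, true_signal))
    return (squared / len(frame)) ** 0.5


# Hypothetical data: a flat patch of faint sky, 500 pixels wide, 64 exposures.
rng = random.Random(42)
true_signal = [10.0] * 500
frames = [simulate_frame(true_signal, 5.0, rng) for _ in range(64)]

single_error = rms_error(frames[0], true_signal)
stacked_error = rms_error(stack_frames(frames), true_signal)

print(f"single frame RMS error:   {single_error:.2f}")
print(f"64-frame stack RMS error: {stacked_error:.2f}")
```

Because random noise partly cancels when averaged, the stacked error should come out far lower than that of any single exposure (roughly by the square root of the number of frames). Real stacking software also has to align the frames and reject satellites, hot pixels, and so on, which this sketch ignores.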

Samsung Ballie

Another fun device launched at CES was the Samsung Ballie. This cute little robotic ball roams around the house with a built-in projector. Depending on what it is interacting with, it can project onto the walls, floor, or ceiling. Example use cases highlighted in the launch video include watching movies, playing with the pet dog, and projecting a beautiful starscape on the ceiling at night.

Swarovski Optik AX Visio

Another niche device being given AI superpowers is the Swarovski Optik AX Visio binoculars. These binoculars are designed for bird watchers, and contain a built-in camera and AI model to identify the birds being observed. I don’t know much about the world of twitching, but I’m pretty sure identifying the birds from memory is part of the fun of it.

With a starting price of $4,799, you may want to start saving up now to give it as a Christmas present. More details here.

Baracoda BMind mental wellness mirror

AI is literally being squeezed into everything this week. Next up is the BMind mirror. This circular bathroom mirror uses CareOS to provide personalised recommendations and experiences based on the viewer’s mental state. While it sounds a little out there, mental health is a serious issue. If this mirror brings just a little help to its users, then it can’t be a bad thing. Let’s just hope it remains aligned and doesn’t morph into The Magic Mirror from Snow White.

Software & Models

GPT Store

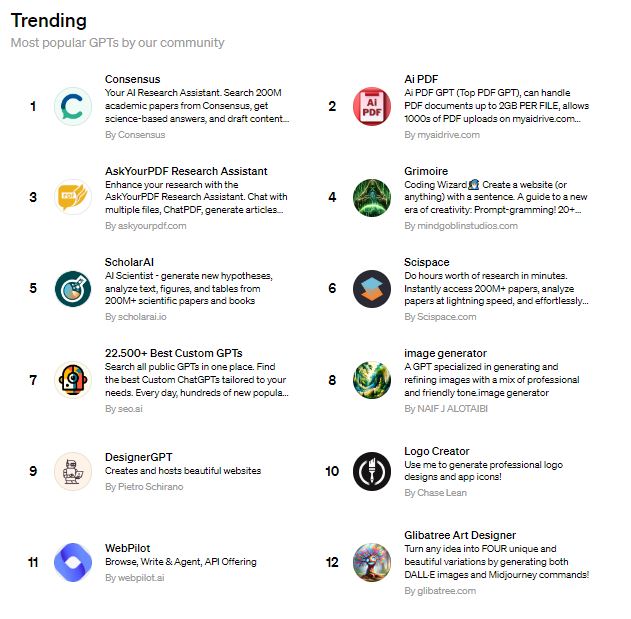

Excitement about the GPT Store has been brewing ever since the launch of GPTs in early November 2023. It’s slightly mind-boggling to think that was just 8 weeks ago, and in the meantime Sam Altman has managed to get fired, poached, re-hired, and married! By launch day itself there were said to be over 3 million GPTs in the store. In comparison to some of the third-party GPT stores (that magically appeared within hours of GPTs being launched), the official GPT Store is still very basic. There are limited categories, and no obvious ranking/rating system at present. Those that are trending appear to have had significant exposure in advance of the store launching.

GPTs themselves are incredibly easy to create, but are still fairly limited in their functionality. The most popular GPTs are currently those that are connected to interesting and useful back-ends through the API actions mechanism. As of 12 January 2024, the top 12 trending GPTs were:

The big question everyone is asking, though, is how and when they will be monetized. So far there is limited information available on this subject, but the suggestion in the OpenAI blog is that creators will be rewarded based on the usage of their GPT, and that the system will be rolled out to creators in the USA first.

GPTs are a great way to try out a new idea or concept before investing significantly in a new product. One of the great features of GPTs is the multi-modal nature of the model. For example, in “Clean My Room”, a simple GPT created by vocabotics, an image of a messy room can be analysed and instructions provided on how to clean it up. In “Missing Ingredients” you can take a photo of your pantry/fridge along with a photo of some food you would like to eat, and it will generate a recipe and a shopping list for the missing ingredients. Just remember you currently need a ChatGPT Plus subscription to access the GPT Store, although there have been rumours this may change.

As with the rabbit r1 there has been a lot of hype around GPTs this week. Will it last?

Meta Audiobox

Meta seems to be churning out new models on an almost weekly basis. One of this week’s goodies was the release of Audiobox, their new foundation research model for audio generation. This noisy model is capable of producing voices and sound effects through a combination of voice and natural language text prompts. This allows users to create custom speech and sounds for any purpose.

You can experience Audiobox here.

DreamGaussian4D: Generative 4D Gaussian Splatting

The DreamGaussian4D paper from the University of Michigan introduces advances in the field of 4D (moving 3D) content generation. By enabling faster, more detailed, and more controllable generation of 3D models with motion, it should greatly improve the realism and interactivity in virtual worlds. You can read the full paper here.

Alibaba Infinite-LLM & 3D Faces

In news from China, Alibaba’s research team has published a paper on Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache. Read the paper on Hugging Face.

They have also been busy developing “Make-A-Character: High Quality Text-to-3D Character Generation within Minutes”.

Phi-2 MIT license

Microsoft have also been releasing models this week. Phi-2, their 2.7bn parameter “mini LLM”, has been released under the MIT license. You can find it as usual on Hugging Face.
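To put “mini” into perspective, here is a rough back-of-the-envelope sketch of how much memory the weights of a 2.7bn parameter model would need at different numeric precisions. The parameter count comes from the text above; the list of formats is an assumption for illustration (not a statement of which formats Phi-2 ships in), and the figures cover the weights only, not activations or other runtime overhead.

```python
def weight_memory_gb(parameter_count, bytes_per_parameter):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return parameter_count * bytes_per_parameter / 1e9


PHI2_PARAMETERS = 2.7e9  # from the text: 2.7bn parameters

# Bytes per parameter for some common storage formats.
# Assumption: illustrative formats only, not Phi-2's published options.
FORMATS = {"float32": 4, "float16": 2, "int8": 1, "4-bit": 0.5}

for name, size in FORMATS.items():
    print(f"{name}: ~{weight_memory_gb(PHI2_PARAMETERS, size):.2f} GB")
```

At 16-bit precision that works out to roughly 5.4 GB of weights, which is why models of this size are attractive for running on a single consumer GPU or laptop.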

Scientific advances thanks to AI

Microsoft made an interesting announcement regarding a collaboration with the Pacific Northwest National Laboratory (PNNL). The teams are working together to accelerate the rate of development in the fields of chemistry and materials science. The PNNL are currently testing a new battery material that was discovered in a matter of weeks, rather than the usual years, thanks to an AI-driven high performance computer provided by Microsoft. The Microsoft Quantum team identified ~500,000 materials in the space of a few days. This was whittled down to 18 potential materials in the space of just 80 hours. The final candidate has the potential to reduce the required lithium by 70%.

Advances such as these are going to rapidly change the world for the better in many fields. Medical and pharmaceutical developments are sure to benefit from similar leaps in productivity. More details can be found in the Microsoft feature.

GitHub Trending

The GitHub Trending page is an amazing resource for those looking to play with the latest AI tools and models. For those wondering “What is GitHub?”, GitHub is the central repository where many programmers safely store their source code. While you can create private “repos”, many programmers work on an “Open Source” basis, whereby they share their code with the world in public repos. The great thing about this is that you can try the latest technology from some of the world’s greatest programmers from the comfort of your bedroom.

If you’re feeling brave you can report bugs and issues, and even suggest modifications to the code. Getting these projects up and running is not always a simple task (particularly if you are a Windows user!), but with a bit of practice you can soon learn how. With the advent of AI, and simplification of the tools and methods, it’s never been easier to get going. Just remember, though, that a lot of the code is at an early or experimental stage, so don’t expect a smooth, bug-free experience. If, however, you want to be playing with the latest toys several months before the rest of the world, then give it a go.

A few of the trending AI related repos this week included:

https://github.com/joaomdmoura/crewAI

https://github.com/myshell-ai/OpenVoice

https://github.com/jzhang38/TinyLlama

Dev news

Open Interpreter

When GPT-4 introduced Code Interpreter, there was widespread excitement at the unlimited possibilities of GPT being able to execute code. While it gave GPT some great superpowers, it is unfortunately lacking in a few key areas. Of particular concern are the lack of internet access, a limited set of pre-installed packages, a 100MB maximum upload file size, a 120 second execution time limit, and the fact that the state is cleared when the environment closes.

The team behind Open Interpreter set out to solve these limitations. This great tool gives you all the power of Code Interpreter but lets the LLM execute the code locally. This means that full access to the internet is available, and there are no more limitations regarding packages, file sizes, and execution time. As the code is open source and supported by a community of developers, its abilities and features are much more likely to expand than those of the native GPT Code Interpreter, which is closed-source and controlled by OpenAI.

You also have a choice of LLMs, including GPT-4 if you wish. At present, you will need to install the package manually following the instructions at their GitHub repo. However, there is a desktop app under development, for which you can sign up for early access on their website.

NVIDIA / LlamaIndex collaboration

CES was also used to announce a collaboration between NVIDIA and LlamaIndex. For a while now, developers have been wanting to use local LLMs during development and testing of their new products and services. This has a number of advantages, such as no API fees, uninterrupted access, performance, and data privacy, among others.

NVIDIA have released an SDK called TensorRT-LLM. Put simply, this allows LLMs to be run locally using the consumer grade RTX range of GPUs. NVIDIA has particularly focused on providing optimised support for the Phi-2, Llama 2, Mistral-7B, and Code Llama models.

LlamaIndex is a data framework that simplifies the process of connecting data sources to large language models.

NVIDIA and LlamaIndex have now collaborated to make it easier than ever to get up and running with open source local LLMs. You can read full details on the NVIDIA Technical Blog. LlamaIndex have also prepared a Colab notebook for those wanting to try it out.

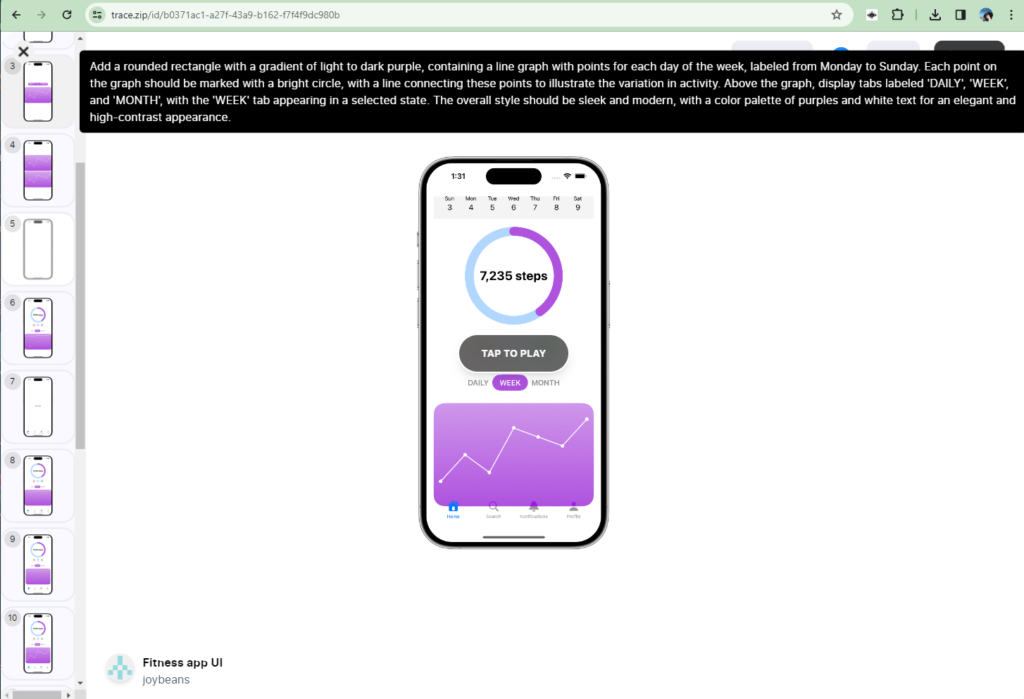

trace

trace is a tool for turning drawings or descriptions of iOS app user interfaces into functioning SwiftUI apps using the power of AI. It’s far from perfect yet, but it is a great start for the field of AI UX/UI design.

M3 Max thermal tip

@ivanfioravanti has pointed out a neat trick to prevent the M3 Max throttling. His tweet explains it best:

Back pages

MoAiJobs

Over the last couple of weeks there have sadly been many layoffs in the tech world. If you’ve recently found yourself in this position, or are simply looking for an AI focused role, this great new job board is sure to have something for you. All the jobs are AI related, in the fields of Engineering, Design, Machine Learning, Research, Marketing, Sales, and Finance. Visit https://www.moaijobs.com/ for your next AI role today.

Advancements in AI video creation

RunwayML are making huge strides in the field of AI video creation. One great example of the current state of the art this week came from Olaf. Through a clever combination of images from DALL-E 3, RunwayML, Pixabay, and CapCut, with some cool music by Ashot Danielyan, he’s made this great short movie. How many more months before we start seeing feature length movies?

Births, Deaths and Marriages

In news from Japan, Nikon has announced the launch of a system that uses AI to alert farmers if their cows are about to give birth. At $6,200 per year for 100 cows it doesn’t sound unreasonably priced, and is probably better value than the twitchers’ binoculars in fact! Full story at The Japan Times.

Luckily there don’t appear to have been any significant deaths in the world of AI this week. However, the AI Death Calculator has been doing the rounds.

Finally, Barsee broke the news that the busiest founder in AI, OpenAI’s Sam Altman, has tied the knot with partner Oliver Mulherin in a secret beach-side wedding.

That’s all for this week. To receive this newsletter in your inbox, please subscribe using the links below.